Introduction

With the public preview of Azure Data Lake Analytics and U-SQL scripting language becoming available, doing big data processing has become simpler and less daunting for the non-hadooper. Besides the simplicity, the ability to query external data sources such as Azure SQL Database, Azure SQL Data Warehouse and SQL running on Azure VM, from within a U-SQL script, creates a very compelling case to jump on board. The focus of this post is my experience with getting that external connection up and running with Azure SQL Database serving as my external source.

Prerequisites

- You will need an Azure Subscription. Free Trial

- Visual Studio. Visual Studio Community 2015

- Azure Data Lake Tools for Visual Studio

- Azure SQL Database

- (ADLS)Azure Data Lake Store (May take some time to be accepted into preview.)

- (ADLA)Azure Data Lake Analytics (May take some time to be accepted into preview.)

Azure Data lake

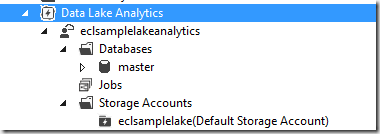

The first thing you will need to do is create a database in Azure Data Lake. The simplest way to do that is through Visual Studio and the Azure Data Lake Tools. In Visual Studio server explorer you will see a little blue cloud icon, this will allow you to connect to your Azure subscription. Once you authenticate a Data Lake Analytics node will appear in the Azure resources tree.

Right click on the Databases folder and create a new database. This database will be used by ADLA to store the schema of the external resource you are trying to query. We will circle back to that later, for now give it a name that is representative of the external data source you are planning on querying. For the purpose of this example I have an Azure SQL Database called SampleDB, so I will name the Azure Data Lake database SampleDBExternal.

Powershell

We now need to utilize PowerShell to begin the connection process to the external data source.

Please gather the following information:

- Azure SQL Database

- Server Name – <name>.database.windows.net

- Username – examplesa

- Password – examplePa$$w0rd

- Azure Data Lake

- Azure Data Lake Analytics Account Name – eclsamplelakeanalytics

- Database Name – SampleDBExternal

Once you have gathered that information we can begin with making sure your PowerShell environment has the necessary modules.

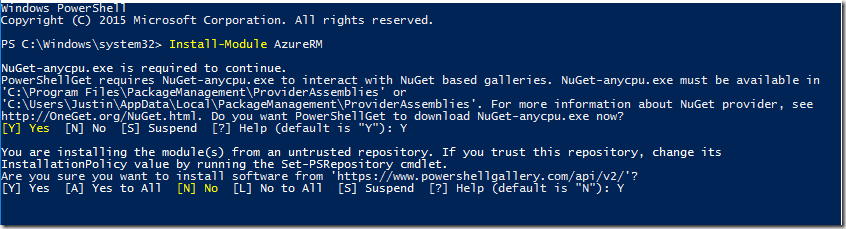

Open PowerShell as an administrator and type in Install-Module AzureRM.

If you have not already done this you will be prompted to install PowerShellGet, which connects to PowerShell Gallery (NuGet for PowerShell). Once you enter Yes for both options the AzureRM module will be available.

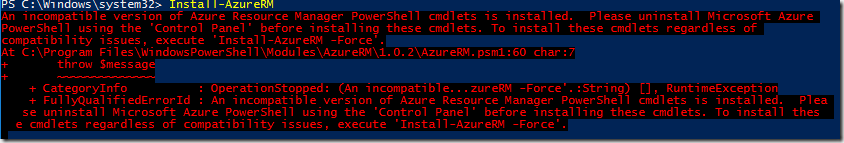

Next type Install-AzureRM. If you see an error like below just follow the instructions on uninstalling Microsoft Azure PowerShell.

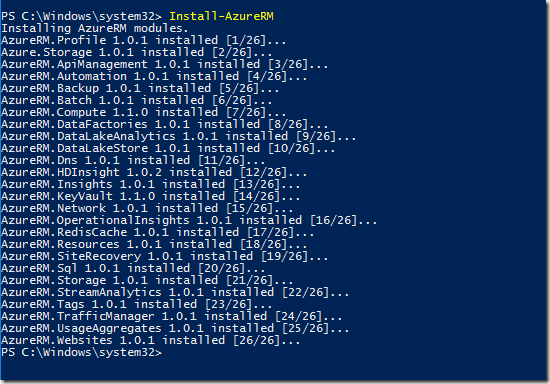

The command Install-AzureRM will display the following if successful. Notice it pulls in AzureRM.DataLakeAnalytics and AzureRM.DataLakeStore.

Now that PowerShell is setup with AzureRM you will need to Login to your Azure subscription which contains your Azure Data Lake accounts. Login-AzureRmAccount will pop up the authentication window, enter your Azure subscription credentials.

Login-AzureRmAccount Get-AzureRmSubscription Set-AzureRmContext –SubscriptionID

After supplying your Azure credentials and setting the context to the subscription which holds your Azure Data Lake accounts, you will now need the information you gathered above for the next commands. The following commands are used to create a credential object for your external data source in the Azure Data Lake Analytics account that is named.

$passwd = ConvertTo-SecureString “examplePa$$w0rd” -AsPlainText –Force

$mysecret = New-Object System.Management.Automation.PSCredential("ExampleSecret", $passwd)

New-AzureDataLakeAnalyticsCatalogSecret –Database “SampleDBExternal” –Host sample.database.windows.net -Port 1433 -Secret $mysecret –AccountName “eclsamplelakeanalytics”

Azure SQL Database

You will need to allow an IP range in the Azure SQL Database firewall. The Data Lake Analytics services are running from a range of IPs 25.66.0.0 to 25.66.255.255. I am not sure if they will be adding more IP ranges in the future, but you will receive a server connection error when the U-SQL script attempts to query the external data source if the IPs are not allowed.

Visual Studio – U-SQL Project

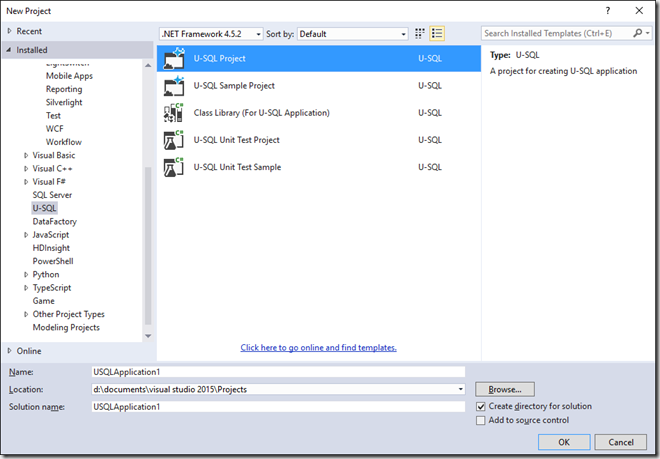

Finally we can make use of the previous setup in a U-SQL script. Open Visual Studio and create a new U-SQL project.

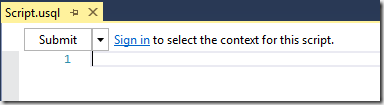

If you have not signed into your Azure subscription within visual studio, you will notice a Sign In link at the top of the newly created U-SQL script file.

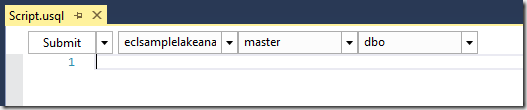

After you successfully sign in you will see any Data Lake Analytic accounts and corresponding databases you have available.

The first part of the U-SQL script we need to define is the credential to be used when accessing the external data source. The database context should be set to the same database used when we executed the New-AzureDataLakeAnalyticsCatalogSecret command in PowerShell. Once the context is set we can use the CREATE CREDENTIAL U-SQL statement to define the credential, passing in the Azure SQL Database username. (U-SQL Credential Object Documentation)

USE DATABASE SampleDBExternal;

CREATE CREDENTIAL IF NOT EXISTS ExampleCred

WITH USER_NAME = "examplesa",

IDENTITY = "ExampleSecret";

Next we use the U-SQL credential to create our DATA SOURCE object. This is where we have the option of creating an AZURESQLDB / SQLSERVER / AZURESQLDW data source connection. The other optional parameter PROVIDER_STRING allows you to pass additional ADO.NET connection string options. I found I was unable to create my external table without specifying the Initial Catalog. (U-SQL Data Source Object Documentation)

CREATE DATA SOURCE IF NOT EXISTS SampleSource FROM AZURESQLDB WITH (CREDENTIAL = ExampleCred, PROVIDER_STRING = "Initial Catalog=SampleDB;");

Now we can use the data source object SampleSource to create our External Table. An external table is a meta data object where the data exists outside of U-SQL, in this case Azure SQL Database. (U-SQL Table Documentation)

CREATE EXTERNAL TABLE IF NOT EXISTS dbo.LocalExampleTable

(

Id Guid,

Name string

)

FROM SampleSource LOCATION "[dbo].[SourceExampleTable]";

With the external table definition created, we can now query it as if it were local to our ADLA account.

@test =

SELECT *

FROM dbo.LocalExampleTable;

OUTPUT @test

TO "/Output/TestQuery_AzureSqlDb.csv"

USING Outputters.Csv();

Conclusion

Recap:

- Create database in Azure Data Lake account to store external data source credentials and external table schemas.

- Use PowerShell to create CatalogSecret credential to external data source.

- Allow IP range in Azure SQL Database firewall so Azure Data Lake Analytics will be able to execute remote queries.

- Define Credential Objects, Data Source Objects and External Table definition in U-SQL script.

Without a doubt there is a fair amount of upfront work that needs to be done to get these Azure services talking to each other. However, the simple fact that this type of connection can be accomplished, helps to tie the Azure Big Data ecosystem together.