When using EF Core with PostgreSQL for a case-insensitive “LIKE” search, you can leverage PostgreSQL-specific features to achieve the desired behavior. PostgreSQL supports the ILIKE operator, which performs a case-insensitive pattern matching operation. Here’s an example of how to perform a case-insensitive “LIKE” search using EF Core and PostgreSQL:
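A minimal sketch of such a query, assuming the Npgsql EF Core provider (the `Npgsql.EntityFrameworkCore.PostgreSQL` package) is installed, which exposes `EF.Functions.ILike`. The `Customer` entity and the `CustomerSearch` helper are hypothetical names used only for illustration.

```csharp
using System.Linq;
using Microsoft.EntityFrameworkCore;

// Hypothetical entity, used only for illustration.
public class Customer
{
    public int Id { get; set; }
    public string Name { get; set; } = "";
}

public static class CustomerSearch
{
    // Builds a query that the Npgsql provider translates to: "Name" ILIKE '%term%'.
    // Note: any % or _ characters inside term act as wildcards; they are not escaped here.
    public static IQueryable<Customer> WhereNameContains(IQueryable<Customer> customers, string term)
    {
        var pattern = $"%{term}%";
        return customers.Where(c => EF.Functions.ILike(c.Name, pattern));
    }
}
```

The method is meant to be called with a `DbSet<Customer>` from a context configured with `UseNpgsql`. `EF.Functions.ILike` is only a translation marker, so it works inside a database query and throws if it is evaluated in memory.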

Helpful C# – Partitioner

In C#, the Partitioner class is part of the System.Collections.Concurrent namespace and is used to partition data into smaller chunks for parallel processing. It provides an easy way to divide work among multiple tasks or threads in a parallel algorithm.

Here’s an example of how to use the Partitioner class:
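One way this might look is a range partitioner feeding `Parallel.ForEach`. This is a sketch rather than a definitive pattern; the `PartitionerExample` class and `SumOfSquares` method are hypothetical names chosen for the example.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;
using System.Threading.Tasks;

public static class PartitionerExample
{
    // Sums the squares of 0..count-1 by splitting the index range into chunks.
    // count must be greater than 0, because Partitioner.Create rejects an empty range.
    public static long SumOfSquares(int count, int rangeSize)
    {
        long total = 0;

        // Each partition is a Tuple<int, int>: inclusive start, exclusive end.
        var ranges = Partitioner.Create(0, count, rangeSize);

        Parallel.ForEach(ranges, range =>
        {
            // Accumulate locally so the shared total is touched once per chunk.
            long local = 0;
            for (int i = range.Item1; i < range.Item2; i++)
            {
                local += (long)i * i;
            }
            Interlocked.Add(ref total, local);
        });

        return total;
    }
}
```

Calling `PartitionerExample.SumOfSquares(1000, 100)` splits the work into ten ranges of 100 indices each. Processing a whole range per delegate call keeps the per-item overhead lower than invoking a delegate for every index.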

Helpful C# – OfType

In C#, the OfType method is used to filter a collection and retrieve only the elements that are of a specific type or that can be cast to a particular type. It is part of LINQ (Language-Integrated Query) and can be used with collections that implement the IEnumerable interface.

The syntax for using OfType is as follows:
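In its simplest form the call is `source.OfType<T>()`. The sketch below filters a mixed collection down to its strings; the `OfTypeExample` class and `StringsOnly` method are hypothetical names used for illustration.

```csharp
using System.Collections.Generic;
using System.Linq;

public static class OfTypeExample
{
    // Returns only the elements that are strings; other types and nulls are skipped.
    public static List<string> StringsOnly(IEnumerable<object> items)
    {
        return items.OfType<string>().ToList();
    }
}
```

For example, `OfTypeExample.StringsOnly(new object[] { 1, "two", 3.0, "four", null })` returns a list containing "two" and "four". Unlike `Cast<T>()`, `OfType<T>()` does not throw when it meets an element of another type; it simply leaves it out.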

Workaround – Google Sign-In without Google+ API with MVC .NET and Owin

A few years back I created a small custom application for a client that used their Google logins for authentication. The web application was written with ASP.NET MVC and used the Katana/OWIN pipeline. The common practice when setting up Google sign-in for such an application was to also enable the Google+ API. If you did this, then like me you recently received an email explaining that the Google+ APIs will be shut down as of March 2019. I spent the last few days reading through the documentation to understand what needs to be done to fix this without completely changing the current login flow, but they don't seem to make this transition easy for .NET applications. Thankfully, I finally found the answer I was looking for: a workaround posted in GitHub comments that addresses this exact issue. If you are in the same boat as me, have a look at this comment. I have made the changes recommended there and can verify that my Google Sign-In is now working again without the Google+ API enabled.

Change Default Swagger Route in an ASP.Net Core Web Api

Introduction

Over the past few weeks I have been doing some work with ASP.NET Core Web API projects using Swagger. The setup was pretty standard until the APIs needed to be deployed to staging and production environments. The web APIs are hosted in Docker containers behind a reverse proxy, and the staging and production environments required a route prefix for each API. This meant that the default URLs for the controllers and Swagger would need to include that prefix. Adding a route prefix to Swagger and Swagger UI is a pretty quick code change.

Starting an Azure Data Factory Pipeline from C# .Net

Introduction

Azure Data Factory (ADF) does an amazing job of orchestrating data movement and transformation activities between cloud sources. Sometimes you may also need to reach into your on-premises systems to gather data, which is possible with ADF through data management gateways. However, you may run into a situation where you already have local processes running, or you cannot run a specific process in the cloud, but you still want an ADF pipeline to depend on the data being processed locally. For example, you may have an ETL process that begins with a locally run process that stores data in Azure Data Lake. Once that process is completed, you want the ADF pipeline to begin processing that data, followed by any other activities or pipelines. The key is starting the ADF pipeline only after the local process has completed. This post will highlight how to accomplish this through the use of the Data Factory Management API.

Prerequisites

- You will need an Azure Subscription. Free Trial

- Visual Studio. Visual Studio Community 2015

- A Data Factory you want to manually start from .Net


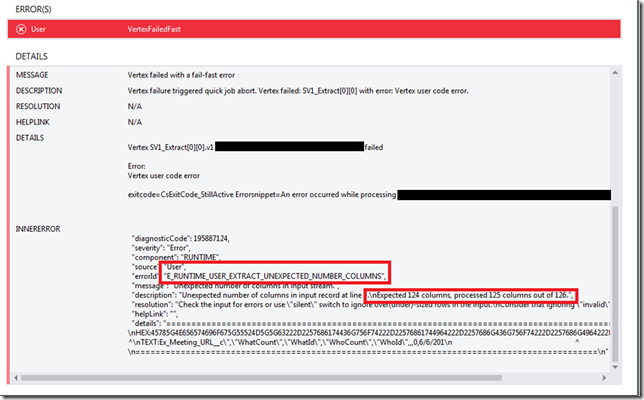

The Lesser Known Resolution to the Unexpected Number of Columns Error Executing U-SQL on Azure Data Lake Analytics

Problem

Azure Data Lake Store (ADLS) gives you the ability to store all raw data in one location, readily accessible for analysis. In my particular case I am pulling data from Salesforce and using the ADLS .NET SDK to store the results in the data lake. Anyone who has worked with Salesforce knows that an object can have hundreds of custom fields, which leads to a file being stored in ADLS with hundreds of columns. One of the first transformations I wanted to use U-SQL and Azure Data Lake Analytics (ADLA) for was to evaluate only a subset of the data by querying a few columns from the hundreds that might exist in a file.

An example script might look like this:

    @tasks_raw =
        EXTRACT AccountId string,
                Id string,
                OwnerId string,
                Status string,
                Subject string,
                ....
                .... More Fields ....,
                ....
                WhatCount int?,
                WhatId string,
                WhoCount int?,
                WhoId string
        FROM "/RawData/Salesforce/Task/2016/01/25/Task.csv"
        USING Extractors.Csv();

    @tasks_subset =
        SELECT AccountId,
               ActivityDate,
               CreatedById,
               CreatedDate,
               Id,
               OwnerId,
               Status
        FROM @tasks_raw;

    OUTPUT @tasks_subset
    TO "/Subset/Salesforce/Task/2016/01/25/Task.csv"
    USING Outputters.Csv();

The first step is to impose a schema on top of the current file by using the “Extract” syntax, thereby storing the result in a variable. Then, from the newly created variable, I can select only the subset of columns that I need to store for later processing. The common problem I have run into is with the Extract portion of this script: I have frequently received the Unexpected Number of Columns error, as seen in the screenshot below.

Solution

The most common cause of this error, especially when the input file is a CSV, is a “comma” in one of the data cells. However, even after I completed all possible sanitization of the data before writing it to ADLS using the .NET SDK, I still received this error.

I began a dialog with the Azure Data Lake team, who informed me that using the ADLS .NET SDK to create and append data to a file in ADLS could be causing a column alignment issue. If you are using the .NET SDK to create a file and then append data to it, be aware that there is a 4 MB limit on the request. This means that if you send a request with N rows and that batch is over 4 MB, the request will terminate in the middle of a record rather than at a record boundary. The solution here is simple: I needed to add some logic to batch the rows into chunks of 4 MB or less, ensuring that the final row of each chunk ends on a record boundary.

Some sample code would look like this:

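    // Requires: using System.Collections.Generic; using System.IO; using System.Text;

    // Request size limit. The original listing references FOURMB without showing
    // its definition, so this value is an assumption based on the 4 MB limit above.
    private const int FOURMB = 4 * 1024 * 1024;

    // Writes rows to ADLS in chunks that stay under the request limit, so every
    // request ends on a record boundary. sw.Write adds no delimiter, so each row
    // must already end with its record delimiter.
    public void StoreData(string path, List<string> rows, bool append)
    {
        var buffer = new MemoryStream();
        var sw = new StreamWriter(buffer);

        foreach (var row in rows)
        {
            // Send the current chunk before this row would push it past the limit.
            if (buffer.Length + Encoding.UTF8.GetByteCount(row) > FOURMB)
            {
                buffer.Position = 0;
                if (append)
                {
                    execute_append(path, buffer);
                }
                else
                {
                    // The first chunk creates the file; every later chunk appends.
                    execute_create(path, buffer);
                    append = true;
                }

                buffer = new MemoryStream();
                sw = new StreamWriter(buffer);
            }

            sw.Write(row);
            sw.Flush(); // keep buffer.Length accurate for the next size check
        }

        // Send whatever is left over after the last full chunk.
        if (buffer.Length <= 0) return;

        buffer.Position = 0;
        if (append)
        {
            execute_append(path, buffer);
        }
        else
        {
            execute_create(path, buffer);
        }
    }

    private AzureOperationResponse execute_create(string path, MemoryStream ms)
    {
        var beginCreateResponse = inner_client.FileSystem.BeginCreate(path, DataLakeAppConfig.DataLakeAccountName, new FileCreateParameters());
        var createResponse = inner_client.FileSystem.Create(beginCreateResponse.Location, ms);
        return createResponse;
    }

    private AzureOperationResponse execute_append(string path, MemoryStream ms)
    {
        var beginAppendResponse = inner_client.FileSystem.BeginAppend(path, DataLakeAppConfig.DataLakeAccountName, null);
        var appendResponse = inner_client.FileSystem.Append(beginAppendResponse.Location, ms);
        return appendResponse;
    }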

Conclusion

While working with any type of delimited file, you have no doubt run into this unexpected number of columns error. The problem is exacerbated by the fact that you may have hundreds of columns in the file, which makes the cause very difficult to track down. If you are using the ADLS SDK to store files in the data lake and you feel you have thoroughly gone through all other possible solutions, give this a shot. Either way, it might be worth changing your storage pattern to avoid this problem on a future data file.