Below are links to questions I have contributed to on StackOverflow. If you have time, or if any of the questions interest you, take a moment to review the question and its answers. If you feel one of the answers appropriately answers the question, please vote that answer up to better serve the community and future users looking for an answer to a similar question.

StackOverflow October 2016 Contributions

StackOverflow September 2016 Contributions

StackOverflow August 2016 Contributions

Iterate Over the Results of an Azure SQL Stored Procedure in an Azure Logic App

Introduction

If you need to quickly create a business workflow or other B2B integration, look no further than Azure Logic Apps. Azure Logic Apps is a cloud-based workflow and integration service. With its multitude of built-in connectors, you can easily automate many common business workflows. The Azure SQL Database connector and its execute stored procedure action were of interest to me when I needed to implement an automated email notification. The output of a stored procedure from a source system would provide a list of users who should receive reminder emails. Azure Logic Apps can iterate over the results of an action; however, iterating over the results of a stored procedure is not as straightforward as some of the other examples. In this post I use the automated email notification example to illustrate what I needed to do to iterate over the results of a stored procedure.

StackOverflow July 2016 Contributions

Below are links to questions I have contributed to on StackOverflow. If you have time, or if any of the questions interest you, take a moment to review the question and its answers. If you feel one of the answers appropriately answers the question, please vote that answer up to better serve the community and future users looking for an answer to a similar question.

- JSON as source dataset in Data Factory Azure

- Azure Data Factory – how to structure slices for erratically arriving blobs

- Does the output dataset really matter for HDInsightHive type of activity?

- Custom .net azure-data factory activity

- Best practices for loading billions of data and appending newly updated records in PowerBI

- Power BI Embedded, updating a dashboard

- How Direct Query Report works

- Azure SQL DB to Azure SQL DB replication

- Can you hard code an Azure AD login into an application?

- Power BI Embedded – Embedding Tiles

StackOverflow June 2016 Contributions

Below are links to questions I have contributed to on StackOverflow. If you have time, or if any of the questions interest you, take a moment to review the question and its answers. If you feel one of the answers appropriately answers the question, please vote that answer up to better serve the community and future users looking for an answer to a similar question.

- PowerBI Embedded API functionality

- Power BI report template

- Creating multiple objects in one shot within ADF

- Does redeploying existing running pipeline re-process Ready time slices again?

- Issue publishing report to Power BI Workspace

- How to remove pipelines from ADF?

- Single Sign-On When Using PowerBI REST API

- power bi and azure Table live refresh

- Authorizing Data Lake linked services through Visual Studio Data Factory Project

Running an Azure Data Factory Pipeline on a Weekday Schedule Using an Azure Function

Introduction

I have written a few posts about different aspects of Azure Data Factory. I use it as the main workhorse of my data integration and ETL projects. One major drawback I have found with Azure Data Factory is the scheduling system: it is not as flexible as I and many others would like it to be. That said, there are certainly ways to adapt and get more control over an Azure Data Factory pipeline’s execution. In my post Starting an Azure Data Factory Pipeline from C# .Net, I outline the need to kick off a pipeline after a local job has completed and how this can be achieved by using the SDK to programmatically set the pipeline’s Start/End dates. You may not have that specific requirement, but let’s say you want to run a pipeline only on weekdays or on another specific schedule. This can be accomplished by reusing the code from my prior post and scheduling a local console app. However, I thought it would be more fun to use Azure Functions to kick off a pipeline on a weekday schedule and provide a fully cloud-based solution.
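To make the weekday idea concrete, here is a minimal sketch of the date logic only, not the code from the full post. The class and method names (`WeekdaySchedule`, `IsWeekday`, `GetActiveWindow`) are hypothetical, and the one-day window is an assumption about how the pipeline's Start/End dates might be chosen.

```csharp
using System;

public static class WeekdaySchedule
{
    // True for Monday through Friday.
    public static bool IsWeekday(DateTime date)
    {
        return date.DayOfWeek != DayOfWeek.Saturday
            && date.DayOfWeek != DayOfWeek.Sunday;
    }

    // Returns the one-day window (start inclusive, end exclusive) that the
    // pipeline could be made active for, or null when the day is a weekend.
    // Assumption: the caller passes the current UTC time and then applies
    // the window as the pipeline's Start/End dates.
    public static Tuple<DateTime, DateTime> GetActiveWindow(DateTime utcNow)
    {
        if (!IsWeekday(utcNow))
        {
            return null;
        }

        DateTime start = utcNow.Date;
        return Tuple.Create(start, start.AddDays(1));
    }
}
```

If the function runs on a timer trigger, the trigger's schedule expression can restrict execution to weekdays by itself (for example `0 0 6 * * 1-5`, an illustrative value), in which case the check above acts only as a safety net.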

Run Oracle RightNow Analytics Report with C#

Introduction

In a previous post, Get All Users from Oracle RightNow SOAP Api with C#, I gave a simple example of how to get object data using the QueryCSV method of the API. Another helpful method available on the Oracle RightNow API allows you to run an Analytics Report and receive a CSV result set. Being able to execute these reports from the API gives you the opportunity to structure the data in a form that directly meets your needs.

Prerequisites

- You will need access to an Oracle RightNow instance

- Oracle RightNow report defined

- Visual Studio (for example, Visual Studio Community 2015)

- Create a new console application

- Add service reference to RightNow (follow previous post here)

Visual Studio

In order to run the following sample method, you will need to have a service reference defined for the Oracle RightNow system you would like to access. I briefly went through this in the previous post so please follow up there on how to accomplish that.

    private static void run_report(int analyticsReportId)
    {
        // Identify the report to run by its ID.
        var analyticsReport = new AnalyticsReport();
        var reportID = new ID
        {
            id = analyticsReportId,
            idSpecified = true
        };
        analyticsReport.ID = reportID;

        // Optional: example of a date range filter for reports that require one.
        //analyticsReport.Filters = new[]
        //{
        //    new AnalyticsReportFilter
        //    {
        //        Name = "UpdatedRange",
        //        Operator = new NamedID {ID = new ID {id = 9, idSpecified = true}},
        //        Values =
        //            new[]
        //            {
        //                $"{new DateTime(2016, 01, 20, 00, 00, 00).ToString("s")}Z",
        //                $"{new DateTime(2016, 01, 20, 23, 59, 59).ToString("s")}Z"
        //            }
        //    }
        //};

        byte[] fileData;

        // Supply the credentials for your RightNow instance.
        var _client = new RightNowSyncPortClient();
        _client.ClientCredentials.UserName.UserName = "";
        _client.ClientCredentials.UserName.Password = "";

        var clientInfoHeader = new ClientInfoHeader { AppID = "Download Analytics Report data" };
        var tableSet = new CSVTableSet();

        try
        {
            // Arguments after the report: row limit, start index, delimiter,
            // return raw result, disable MTOM.
            tableSet = _client.RunAnalyticsReport(clientInfoHeader, analyticsReport, 10000, 0, ",", false, true, out fileData);
        }
        catch (Exception e)
        {
            Console.WriteLine(e.Message);
            throw;
        }

        // Each row of the first table is a delimited string.
        var tableResults = tableSet.CSVTables;
        var data = tableResults[0].Rows.ToArray();
    }

As you can see, it is pretty simple to execute an analytics report through the RightNow API. Where the QueryCSV function requires you to define a ROQL query, here you populate an AnalyticsReport object to be executed. Reports that require filters need the Filters array on the AnalyticsReport object populated; you can see an example of a date range filter commented out in the method above. Finally, the values returned from the report use the same result objects that the QueryCSV method returns.
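Since each returned row is a delimited string, a small helper can turn the result into records keyed by column name. The following is a minimal sketch rather than part of the RightNow API: `ReportCsv`, `ToRecords`, and `ToFilterValue` are hypothetical names, and it assumes the column names are available as a single delimited string. The naive `Split` does not handle values that themselves contain the delimiter or quotes.

```csharp
using System;
using System.Collections.Generic;

public static class ReportCsv
{
    // Maps each delimited row onto the column names.
    // Missing trailing values are filled with empty strings.
    public static List<Dictionary<string, string>> ToRecords(
        string columns, IEnumerable<string> rows, char delimiter = ',')
    {
        string[] names = columns.Split(delimiter);
        var records = new List<Dictionary<string, string>>();

        foreach (string row in rows)
        {
            string[] values = row.Split(delimiter);
            var record = new Dictionary<string, string>();
            for (int i = 0; i < names.Length; i++)
            {
                record[names[i]] = i < values.Length ? values[i] : string.Empty;
            }
            records.Add(record);
        }

        return records;
    }

    // Formats a date the same way the commented-out filter values do:
    // the sortable "s" pattern followed by "Z".
    public static string ToFilterValue(DateTime utc)
    {
        return utc.ToString("s") + "Z";
    }
}
```

With the method above, this could be called as `ReportCsv.ToRecords(tableResults[0].Columns, tableResults[0].Rows)`, assuming the table exposes its column names through a `Columns` property.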

Conclusion

For my current integration projects, I need to pull data from various APIs, and being able to pull data that is already structured helped speed up the process. Hopefully, seeing this sample and knowing that this ability exists gives you another option for gathering data from the Oracle RightNow API.

Azure Data Factory Copy Activity Storage Failure Error

I was recently tasked with migrating local integration jobs into the cloud. The first job I tackled was an integration between Salesforce and a local financial system. The workflow first downloads the newest entries of a specific object from Salesforce, then pushes them into an on-premises SQL Server staging table. Once the data is copied locally, another job within the financial system processes the staged data.

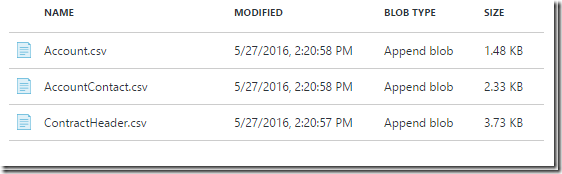

To accomplish this task, I decided to use Azure Data Factory with the on-premises gateway to connect to the local SQL Server database. In some of my other posts I used Azure Data Lake Store to land my data; for this pipeline I decided to use Azure Blob Storage. Since the Azure Blob Storage API supports Append Blobs, I was able to follow a pattern similar to the one I used with Azure Data Lake Store and append the Salesforce data to a blob as I fetched it.

Once I had some sample Append Blobs in my container, my next step was to set up the Azure Data Factory copy activity to transfer that data to the on-premises SQL Server staging tables. This was where I began to run into issues. After a lot of verification and testing, it turned out that Append Blobs are not supported in Azure Data Factory.

Here are a few things to look out for to rule out this issue:

- The blob container blade shows the BlobType of each blob. Check the type of the blobs you are trying to work with in Azure Data Factory.

- I also ran into an issue where the dataset pointing to the Append Blob would not validate.

- When running the Azure Data Factory copy activity against an Append Blob you will see the following error:

Copy activity met storage operation failure at ‘Source’ side. Error message from storage execution: Requested value ‘AppendBlob’ was not found.

This can be a bit misleading if you are not aware that Append Blobs are not supported.