Azure Data Factory is a cloud based, scalable orchestration service. Currently I am using Azure Data Factory (ADF) to coordinate and schedule a large scale ETL process. Building a data factory is a pretty easy process, consisting of various JSON definition files, representing linked services, data sets and pipelines connected together to perform an action. For simple processes it is not very difficult to create the JSON definition files directly in the Azure Portal. However, when you are dealing with very large complex processes that you are trying to orchestrate together, it can become a bit unruly to have to manage and deploy all of those files manually.

Visual Studio

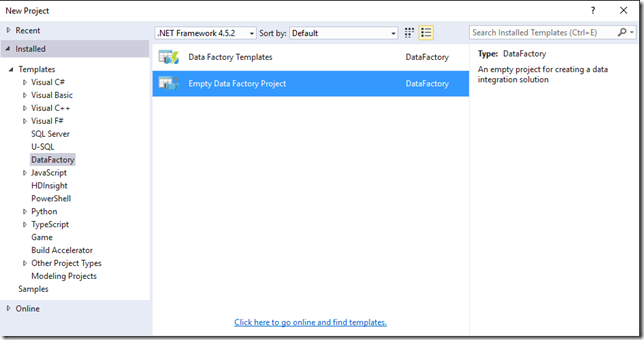

Thankfully you can install Azure Data Factory Tools for visual studio. Installing this extension gives you a data factory project template and the ability to publish directly to the portal.

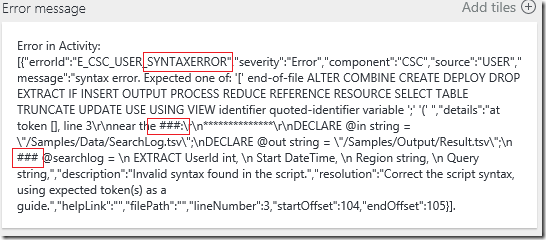

With that being said I have occasionally had issues publishing from Visual Studio, thus the reason for writing this post. Just like everything else we can use some simple PowerShell scripts to deploy ADF JSON definition files. These are very basic scripts to push updates to the data factory, they will not validate the JSON script or do any reference validation that the data factory tools will perform. I mainly use the data set portion of the script because that is where the largest number of files exist.

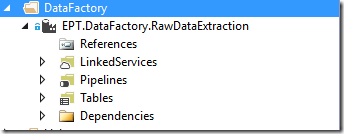

I still use visual studio and an ADF project to do the authoring of my JSON scripts, the script files are organized into LinkedService, Pipeline and Table folders.

One drawback I have with the ADF project template is that the actual file location does not mirror this same folder structure visible in Visual Studio. When looking in the actual project folder on disk you will see all the JSON scripts mixed together. With all scripts landing in a single folder I have found that following a verbose naming convention is very helpful.

I use a naming convention as follows:

- LinkedService_[Type of Service Abbreviation]_[Service Name]

- For example if I have an Azure Data Lake Storage account called SampleDataLake the file name would be “LinkedService_ADLS_SampleDataLake”

- DataSet_[Type of Data Set]_[DataSet Name]

- For example if I have an Azure Sql Data Warehouse with an Employee Dimenision table named Employee_D the file name would be “DataSet_ASDW_Employee_D”

- Pipeline_[Description]

- For example if I had a pipeline which created my employee dimension the file would be named “Pipeline_Employee_Dimension_ETL”

As you can see this is a very explicit naming convention to easily convey what that JSON script is meant to do. This also is very helpful when writing the PowerShell that will loop over specific types of files to deploy.

PowerShell Scripts

The first script is to get your PowerShell session authenticated with the Azure account you would like to deploy your data factory to.

Add-AzureRmAccount

After you are authenticated, you can now create your actual data factory.

New-AzureRmDataFactory -ResourceGroupName "ADF" -Name "SampleFactory" -Location "WestUS"

Once you have created the data factory you can now use that same resource group name and factory name to deploy the rest of the files. Please remember to do them in the following order, Linked Services then Data Sets and last Pipelines. By following the naming convention above you can see the scripts below taking advantage of some simple filtering on the name of the file to deploy the JSON files based on the type they define. The following 3 loops beginning with the Linked Services, deploy each file to the newly created data factory from the project folder.

foreach($file in Get-ChildItem "[ProjectFolder]" -filter "LinkedService*")

{

New-AzureRmDataFactoryLinkedService -ResourceGroupName "ADF" -DataFactoryName "SampleFactory" -Name $file.BaseName -File $file.FullName -Force | Format-List

}

foreach($file in Get-ChildItem "[ProjectFolder]" -filter "DataSet*")

{

New-AzureRmDataFactoryDataset -ResourceGroupName "ADF" -DataFactoryName "SampleFactory" -Name $file.BaseName -File $file.FullName -Force | Format-List

}

foreach($file in Get-ChildItem "[ProjectFolder]" -filter "Pipeline*")

{

New-AzureRmDataFactoryPipeline -ResourceGroupName "ADF" -DataFactoryName "SampleFactory" -Name $file.BaseName -File $file.FullName -Force | Format-List

}

Conclusion

Azure Data Factory is a great service for orchestrating large scale jobs. I would absolutely recommend getting the ADF tooling for visual studio it is definitely a step up from trying to do all the authoring within the Azure Portal. The goal of this post is not to dissuade the use of the tooling, but to provide a quick solution to deploying the scripts to the Azure Portal.