Problem

While working with Azure Data Lake Analytics (ADLA) and Azure Data Factory (ADF), I ran into a strange syntax error when trying to run a DataLakeAnalyticsU-SQL activity in ADF. Before integrating a script with ADF, I always test it first by submitting the job directly to ADLA. From Visual Studio, I created a new U-SQL project, wrote the desired script, and submitted the job to ADLA. The script ran correctly, so at this point I was ready to run it from ADF (Run U-SQL script on ADLA from ADF).

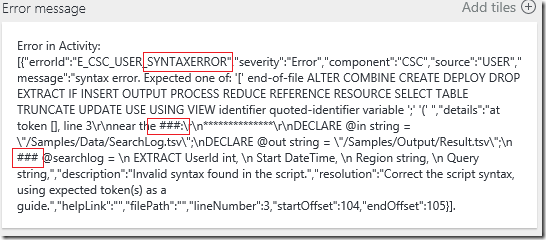

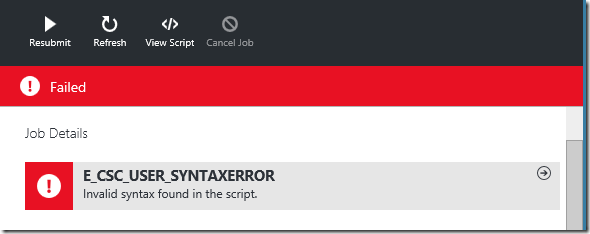

The syntax error I received looked something like this:

Solution

Fortunately, I have been in contact with members of the ADF and ADL teams, who have been excellent at helping resolve the issues I run into.

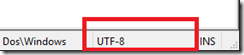

The syntax error seemed to originate in the process that takes the script file from Azure Blob Storage and adds the parameters defined in the Azure Data Factory activity to compile the complete script to execute. The culprit: when the script file is pulled from Azure Blob Storage, its Byte Order Mark (BOM) shows up as an invalid character at the beginning of the script. I was uploading the U-SQL file created in Visual Studio, which is saved with UTF-8-BOM encoding. To get around this issue, create a new file in Notepad++ or Notepad, save it as UTF-8 without BOM (the Notepad++ default), and upload that file to Blob Storage for the ADF activity to use.
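If you would rather not re-save files by hand, the BOM can also be stripped programmatically before the file is uploaded. The following is a minimal Python sketch, assuming the script is a local file that Visual Studio saved as UTF-8 with BOM; `strip_utf8_bom` is a hypothetical helper, not part of any Azure SDK or tool. It removes only the three-byte UTF-8 BOM (`EF BB BF`) and leaves the rest of the file untouched.

```python
import codecs
from pathlib import Path


def strip_utf8_bom(path: Path) -> bool:
    """Rewrite `path` in place without a leading UTF-8 BOM.

    Returns True if a BOM was found and removed, or False if the file
    was already BOM-free (in which case it is not rewritten).
    """
    data = path.read_bytes()
    if not data.startswith(codecs.BOM_UTF8):
        return False
    # Drop only the three BOM bytes; the remainder is already valid
    # UTF-8 and is written back byte-for-byte.
    path.write_bytes(data[len(codecs.BOM_UTF8):])
    return True
```

For example, calling `strip_utf8_bom(Path("MyScript.usql"))` on the Visual Studio output before uploading it to Blob Storage would produce the same bytes as re-saving the file as UTF-8 without BOM in Notepad++. Working on raw bytes, rather than decoding and re-encoding text, guarantees nothing else in the script changes.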

To check whether this is your issue, open the script file in Notepad++ and look at the status bar in the bottom corner to see the file encoding.
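When there are many scripts to check, the same test can be scripted instead of opening each file in an editor. This is a minimal Python sketch, assuming the U-SQL scripts live under a local project folder and use the `.usql` extension; `has_utf8_bom` and `find_bom_scripts` are hypothetical helper names. It only detects the UTF-8 BOM, which is the case described here, and does not try to identify other encodings.

```python
import codecs
from pathlib import Path
from typing import List


def has_utf8_bom(path: Path) -> bool:
    """Return True if the file begins with the UTF-8 byte order mark."""
    with path.open("rb") as f:
        # The UTF-8 BOM is exactly three bytes: EF BB BF.
        return f.read(len(codecs.BOM_UTF8)) == codecs.BOM_UTF8


def find_bom_scripts(folder: Path) -> List[Path]:
    """List every .usql file under `folder` (recursively) that starts with a BOM."""
    return sorted(p for p in folder.rglob("*.usql") if has_utf8_bom(p))
```

Any path returned by `find_bom_scripts` corresponds to a file that Notepad++ would report as UTF-8-BOM.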

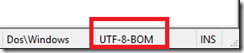

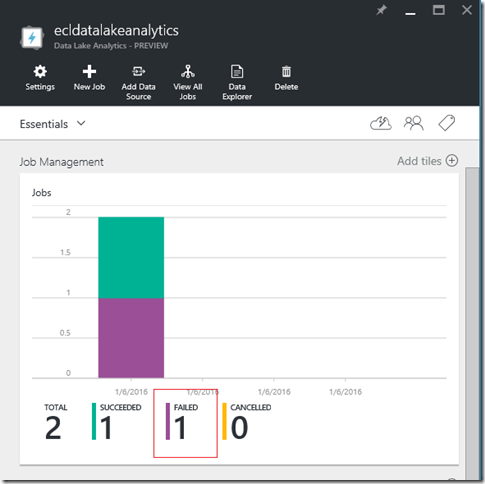

Another way to verify the actual script that was executed is through the Azure Portal and the Azure Data Lake Analytics blade.

Open the Azure Data Lake Analytics blade.

Click on the failed jobs to find the ADF job that failed; its job ID is prefixed with “ADF”. Select that job to open its blade.

At the top of the job blade, select “View Script” to see the actual script that the ADF activity generated and executed.

Notice the red box around the invalid (non-ASCII) character at the beginning of the script that you uploaded to Blob Storage.
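A BOM at the very start of a file is normally consumed silently by editors, so it is worth seeing why it becomes a stray character here. The post does not show how ADF assembles the final script, so the following Python sketch models it under an explicit assumption: the service decodes the blob as plain UTF-8 and prepends one `DECLARE` statement per activity parameter. `build_script`, the parameter name, and the sample script are all hypothetical.

```python
import codecs
from typing import Dict


def build_script(blob_bytes: bytes, params: Dict[str, str], encoding: str) -> str:
    """Hypothetical model of the ADF step: decode the uploaded script and
    prepend one DECLARE statement per activity parameter."""
    body = blob_bytes.decode(encoding)
    declares = "".join(
        f'DECLARE @{name} string = "{value}";\n' for name, value in params.items()
    )
    return declares + body


# An uploaded script saved by an editor as UTF-8 with BOM.
script_bytes = codecs.BOM_UTF8 + b"@rows = EXTRACT id int FROM @in USING Extractors.Csv();\n"
params = {"in": "/input/data.csv"}

naive = build_script(script_bytes, params, "utf-8")
# Plain "utf-8" keeps the BOM as the character U+FEFF, which now sits
# mid-script, directly in front of the uploaded file's first statement.
print(repr(naive.splitlines()[1][:1]))  # '\ufeff'

safe = build_script(script_bytes, params, "utf-8-sig")
# "utf-8-sig" consumes a leading BOM while decoding, so it never reaches the script.
print("\ufeff" in safe)  # False
```

Under this assumption, once any text is placed ahead of the uploaded file, the BOM is no longer at the start of the stream and is treated as an ordinary (invalid) character by the U-SQL parser, which matches the symptom in the “View Script” output.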

Conclusion

If you run into an issue similar to the one outlined here, check the encoding of the script file first. Then go into the Data Lake Analytics blade and look at exactly which script is being executed. Being able to see the actual execution script is an invaluable tool for troubleshooting any issues that crop up.