The Better .NET Unit Tests with AutoFixture: Get Started course by Jason Roberts takes you through the need for and use of AutoFixture. AutoFixture is an open source library that helps you create anonymous test data so you can write unit tests faster. The ability to quickly and easily generate anonymous test data is also a great help when moving towards TDD. I am definitely a proponent of utilizing a TDD methodology, and a tool like AutoFixture certainly helps to reduce the resistance by making the data setup much easier. This course will get you up and running with AutoFixture quickly by taking you through all of the basic usages, and some important advanced ones, that you may need to understand to get AutoFixture integrated into your project. Continue reading “Pluralsight Notes – Better .NET Unit Tests with AutoFixture: Get Started”

Pluralsight Notes – Effective C# Unit Testing for Enterprise Applications

Introduction

Pluralsight is a great online resource for developer and technology training courses. I have recently begun working through a bunch of courses and thought I would begin sharing the notes I take while viewing them. Effective C# Unit Testing for Enterprise Applications by Rusty Divine aims to improve the viewer's unit testing knowledge through solid patterns and practices to follow and an understanding of what to avoid. Continue reading “Pluralsight Notes – Effective C# Unit Testing for Enterprise Applications”

Run Oracle RightNow Analytics Report with C#

Introduction

In a previous post, Get All Users from Oracle RightNow SOAP Api with C#, I gave a simple example of how to get object data using the QueryCSV method of the API. There is another helpful method available on the Oracle RightNow API which allows you to run an Analytics Report and receive a CSV result set. Being able to execute these reports from the API gives you the opportunity to structure the data in a form that directly meets your needs.

Prerequisites

- You will need access to an Oracle RightNow instance

- An Oracle RightNow analytics report already defined

- Visual Studio. Visual Studio Community 2015

- Create a new console application

- Add service reference to RightNow (follow previous post here)

Visual Studio

In order to run the following sample method, you will need to have a service reference defined for the Oracle RightNow system you would like to access. I briefly went through this in the previous post so please follow up there on how to accomplish that.

    private static void run_report(int analyticsReportId)
    {
        var analyticsReport = new AnalyticsReport();
        var reportID = new ID
        {
            id = analyticsReportId,
            idSpecified = true
        };
        analyticsReport.ID = reportID;

        //analyticsReport.Filters = new[]
        //{
        //    new AnalyticsReportFilter
        //    {
        //        Name = "UpdatedRange",
        //        Operator = new NamedID {ID = new ID {id = 9, idSpecified = true}},
        //        Values =
        //            new[]
        //            {
        //                $"{new DateTime(2016, 01, 20, 00, 00, 00).ToString("s")}Z",
        //                $"{new DateTime(2016, 01, 20, 23, 59, 59).ToString("s")}Z"
        //            }
        //    }
        //};

        byte[] fileData;
        var _client = new RightNowSyncPortClient();
        _client.ClientCredentials.UserName.UserName = "";
        _client.ClientCredentials.UserName.Password = "";
        var clientInfoHeader = new ClientInfoHeader { AppID = "Download Analytics Report data" };
        var tableSet = new CSVTableSet();

        try
        {
            tableSet = _client.RunAnalyticsReport(clientInfoHeader, analyticsReport, 10000, 0, ",", false, true, out fileData);
        }
        catch (Exception e)
        {
            Console.WriteLine(e.Message);
            throw;
        }

        var tableResults = tableSet.CSVTables;
        var data = tableResults[0].Rows.ToArray();
    }

As you can see, it is pretty simple to execute an analytics report through the RightNow API. Where the QueryCSV function requires you to define a ROQL query, here we populate an AnalyticsReport object to be executed. Reports that require filters need the filter array populated on the AnalyticsReport object; you can see an example of a date range filter commented out in the method above. Finally, the report results come back in the same result objects that the QueryCSV method returns.
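The commented-out filter in the method above hard-codes a single day. As a minimal sketch, a small helper could build the same pair of values for any day. The class and method names here are hypothetical, and the sketch assumes the report filter accepts the same "sortable date plus Z" strings used in the commented-out example.

```csharp
using System;
using System.Globalization;

public static class ReportFilterValues
{
    // Returns the first and last second of the given day, formatted like the
    // commented-out filter: sortable date/time ("s") plus a trailing "Z".
    // Assumption: the report filter expects timestamps in this form.
    public static string[] ForDay(DateTime day)
    {
        var start = day.Date;
        var end = start.AddDays(1).AddSeconds(-1);
        return new[]
        {
            start.ToString("s", CultureInfo.InvariantCulture) + "Z",
            end.ToString("s", CultureInfo.InvariantCulture) + "Z"
        };
    }
}
```

The returned array could then be assigned to the Values property of the filter instead of the two hard-coded strings.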

Conclusion

For my current integration projects, I need to pull data from various APIs, and having the ability to pull data that is already structured helped to speed up the process. Hopefully seeing this sample, and knowing that this ability exists, will give you another option for gathering data from the Oracle RightNow API.

Accessing the Office 365 Reporting Service using C#

Introduction

As with other cloud offerings from Microsoft, so much data and metadata is being collected that there is often a need to report on that information. Within the Office 365 administration portal you can see the various reports that are offered; however, it may be necessary to pull that data into your own application or reporting tool. Thankfully, you can access the underlying data through PowerShell cmdlets or by using the Office 365 Reporting service. This post will focus on a few simple example methods I used to explore the Office 365 Reporting service using C#.

Prerequisites

- Visual Studio. Visual Studio Community 2015

- Office 365 Administrator Account

Visual Studio

I have uploaded the full source to GitHub, so I will not be posting the full example methods in this post; if you would like to download the full source you can get it here. Each method has local variables for your Office 365 administrator account username and password. One of the example methods I would like to highlight is the run_all_reports method. This was probably the most helpful method when exploring the different reports. It allowed me to quickly loop through each available report and dump the first set of results to an XML file. I could then inspect each report result to see what data was available and which report data I needed to pull into my own application.

    public void run_all_reports()
    {
        var username = "";
        var password = "";

        foreach (var report in Reports.ReportList)
        {
            // Build the REST URL for this report.
            var ub = new UriBuilder("https", "reports.office365.com");
            ub.Path = string.Format("ecp/reportingwebservice/reporting.svc/{0}", report);
            var fullRestURL = Uri.EscapeUriString(ub.Uri.ToString());

            var request = (HttpWebRequest)WebRequest.Create(fullRestURL);
            request.Credentials = new NetworkCredential(username, password);

            try
            {
                // Dispose the response and reader once the report has been saved.
                using (var response = (HttpWebResponse)request.GetResponse())
                using (var readStream = new StreamReader(response.GetResponseStream(), System.Text.Encoding.UTF8))
                {
                    var doc = new XmlDocument();
                    doc.LoadXml(readStream.ReadToEnd());
                    doc.Save($@"C:\Office365\Reports\{DateTime.Now:yyyyMMdd}_{report}.xml");
                    Console.WriteLine("Saved: {0}", report);
                }
            }
            catch (Exception ex)
            {
                // Log the failure and continue with the next report.
                Console.WriteLine(ex.Message);
            }
        }
    }

The get_report_list method simply hits the service endpoint and grabs the definitions of all the available reports in the API. Note that depending on your permission level you may not see all the reports available. Finally, the run_report_messagetrace method is an example of pulling one report and mapping it back to an object using the HttpClient and Newtonsoft Json libraries.
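To illustrate the report list step, here is a rough sketch that extracts report names from a service document. It assumes the endpoint returns a standard Atom service document in which each report is a collection element with an href attribute; I have not reproduced the actual payload here, and the class and method names are hypothetical rather than taken from the GitHub sample.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

public static class ReportListParser
{
    // XML namespace used by Atom service documents.
    private static readonly XNamespace App = "http://www.w3.org/2007/app";

    // Returns the href of every <collection> element in the service document.
    // Assumption: each available report is listed as one collection.
    public static List<string> GetReportNames(string serviceDocumentXml)
    {
        return XDocument.Parse(serviceDocumentXml)
            .Descendants(App + "collection")
            .Select(c => (string)c.Attribute("href"))
            .Where(href => !string.IsNullOrEmpty(href))
            .ToList();
    }
}
```

The resulting names could feed a loop like the one in run_all_reports, in place of a hard-coded report list.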

Conclusion

With any cloud offering today there will generally be some reporting capabilities built in; however, these reports are usually not enough. Luckily, more and more services are exposing their source data through APIs. The Office 365 reporting service is just one example of that, and from the sample methods above you can see how quick it is to get access to this data.

Get All Users from Oracle RightNow SOAP Api with C#

Introduction

This post will be another example from my various system integration work. As in a previous post about getting users from the JIRA API, here I will give a simple example of utilizing the RightNow SOAP API to get a list of all users. RightNow does have a REST API; however, I have no control over the instance I must integrate with, and unfortunately it is disabled there. It has certainly been a bit challenging extracting data from the RightNow API, so hopefully this example will be a simple jumping-off point to help you explore the other objects in the API. Here is a link to the documentation I have used when accessing the RightNow SOAP API.

Prerequisites

- You will need access to an Oracle RightNow instance

- Visual Studio. Visual Studio Community 2015

- Create a new console application

Visual Studio

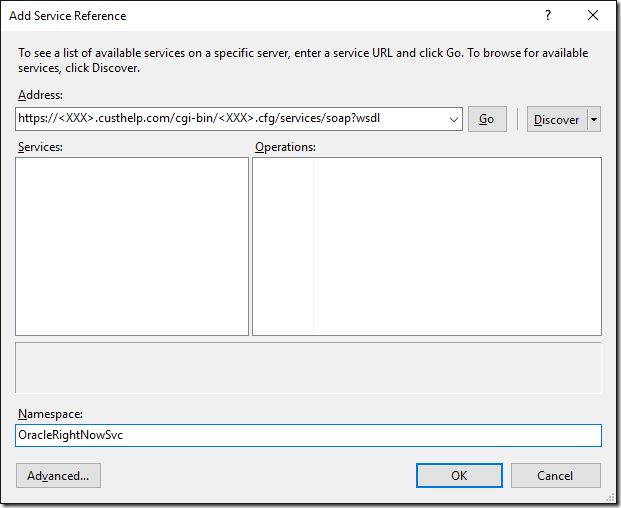

Once you have a new console app created, the first thing you will want to do since this is a SOAP Api, is right click on references and Add Service Reference (Gasp! Can’t remember the last time I did that).

Enter the address of the Oracle RightNow instance you need to connect to, replacing <XXX> in the url https://<XXX>.custhelp.com/cgi-bin/<XXX>.cfg/services/soap?wsdl.

Now that we have the service reference, we can begin making queries against the SOAP API. There are two methods available to fetch data from the API: the object query and the tabular query. In this example I could have used the object query because it is a simple query; however, I always use the tabular query (CSV query) because it allows for more complicated ROQL queries. In general, you will most likely be writing more complicated queries to gather data anyway.

    public static void GetUsers()
    {
        var _client = new RightNowSyncPortClient();
        _client.ClientCredentials.UserName.UserName = "";
        _client.ClientCredentials.UserName.Password = "";

        ClientInfoHeader clientInfoHeader = new ClientInfoHeader();
        clientInfoHeader.AppID = "CSVUserQuery";

        var queryString = @"SELECT
            Account.ID,
            Account.LookupName,
            Account.CreatedTime,
            Account.UpdatedTime,
            Account.Country,
            Account.Country.Name,
            Account.DisplayName,
            Account.Manager,
            Account.Manager.Name,
            Account.Name.First,
            Account.Name.Last
            FROM Account;";

        try
        {
            byte[] csvTables;
            CSVTableSet queryCSV = _client.QueryCSV(clientInfoHeader, queryString, 10000, ",", false, true, out csvTables);

            // Collect every row so the result can optionally be written to a file.
            var dataList = new List<string>();

            foreach (CSVTable table in queryCSV.CSVTables)
            {
                System.Console.WriteLine("Name: " + table.Name);
                System.Console.WriteLine("Columns: " + table.Columns);

                String[] rowData = table.Rows;
                foreach (String data in rowData)
                {
                    dataList.Add(data);
                    System.Console.WriteLine("Row Data: " + data);
                }
            }

            //File.WriteAllLines(@"C:\Accounts.csv", dataList.ToArray());
            Console.ReadLine();
        }
        catch (FaultException ex)
        {
            Console.WriteLine(ex.Code);
            Console.WriteLine(ex.Message);
        }
        catch (SoapException ex)
        {
            Console.WriteLine(ex.Code);
            Console.WriteLine(ex.Message);
        }
    }

In the RightNow API the Account object represents the user in the system. The first step here is to set up the SOAP service client, passing in the credentials needed to authenticate with the SOAP service. Once the client is configured to make the call, you pass in a query for the object you would like to get back in CSV format, in this case the Account object. Once the result comes back you can iterate through the rows within the table.
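Each row comes back as a single delimited string, so a next step is usually to pair the values with their column names. The following is only a sketch under stated assumptions: it treats the column list as one delimited string (as printed by the method above), it assumes no field contains the delimiter, and the class and method names are hypothetical.

```csharp
using System;
using System.Collections.Generic;

public static class CsvRowMapper
{
    // Pairs each value in a row with its column name.
    // Assumption: fields never contain the delimiter, so a plain Split is enough;
    // quoted fields would need a real CSV parser.
    public static List<Dictionary<string, string>> Map(string columns, IEnumerable<string> rows, char delimiter = ',')
    {
        var names = columns.Split(delimiter);
        var result = new List<Dictionary<string, string>>();

        foreach (var row in rows)
        {
            var values = row.Split(delimiter);
            var record = new Dictionary<string, string>();
            for (var i = 0; i < names.Length; i++)
            {
                // Missing trailing values are stored as empty strings.
                record[names[i]] = i < values.Length ? values[i] : string.Empty;
            }
            result.Add(record);
        }

        return result;
    }
}
```

Inside the loop over the tables, this could be called as CsvRowMapper.Map(table.Columns, table.Rows) to look values up by column name rather than by position.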

Also note that the result is a CSVTableSet. This is important because you can define a query with multiple statements, which will return a result table for each statement.

An example might be something like this:

    var queryString = @"SELECT
        Account.ID,
        Account.LookupName,
        Account.CreatedTime,
        Account.UpdatedTime,
        Account.Country,
        Account.Country.Name,
        Account.DisplayName,
        Account.Manager,
        Account.Manager.Name,
        Account.Name.First,
        Account.Name.Last
        FROM Account;

        SELECT
        Account.ID,
        Emails.EmailList.Address,
        Emails.EmailList.AddressType,
        Emails.EmailList.AddressType.Name,
        Emails.EmailList.Certificate,
        Emails.EmailList.Invalid
        FROM Account;

        SELECT
        Account.ID,
        Phones.PhoneList.Number,
        Phones.PhoneList.PhoneType,
        Phones.PhoneList.PhoneType.Name,
        Phones.PhoneList.RawNumber
        FROM Account;";

Conclusion

Getting the users from the Oracle RightNow api is a simple example to get up and running, while also getting some exposure to ROQL and the api.

Accessing Salesforce Reports and Dashboards REST API Using C#

Introduction

If you have read any of my other posts, you know I have been doing work with the Salesforce REST API. I recently had a need to access the Salesforce Reports and Dashboards REST API using C#. While spiking out a simple example to access the Reports and Dashboards REST API I did not come across very much documentation on how to accomplish this. In this post I will walk through a quick spike on how to authenticate with the api and how to call it to get a report. Full code sample can be found Here on GitHub.

Prerequisites

- Salesforce Organization – Sign Up Here

- A Salesforce Connected App

- Visual Studio. Visual Studio Community 2015

Visual Studio

With any access to a Salesforce API you will need a user account (username, password, token) and the consumer key/secret combination from the custom connected app. With these pieces of information, we can begin by creating a simple console application to spike out access to the Reports and Dashboards API. Next we need to install the NuGet packages used below: the DeveloperForce package and RestSharp.

Once these packages are installed we can utilize them to create a function to access the Salesforce reports and dashboards api.

    var sf_client = new Salesforce.Common.AuthenticationClient();
    sf_client.ApiVersion = "v34.0";
    await sf_client.UsernamePasswordAsync(consumerKey, consumerSecret, username, password + usertoken, url);

Here we are taking advantage of some of the common utilities in the DeveloperForce package to create an auth client, which gets us our access token from the Salesforce API. We will need that token next to start making requests to the API. Unfortunately, the DeveloperForce library does not have the ability to call the Reports and Dashboards API; we are just using it here to easily get the access token. This could all be done using RestSharp, but it's simpler to utilize what has already been built.

    string reportUrl = "/services/data/" + sf_client.ApiVersion + "/analytics/reports/" + reportId;
    var client = new RestSharp.RestClient(sf_client.InstanceUrl);
    var request = new RestSharp.RestRequest(reportUrl, RestSharp.Method.GET);
    request.AddHeader("Authorization", "Bearer " + sf_client.AccessToken);
    var restResponse = client.Execute(request);
    var reportData = restResponse.Content;

Since we have used the DeveloperForce package to set up the authentication, we can now use RestSharp and the access token to query the report API. In the code above we set up a RestSharp client with the Salesforce URL, then define the actual request for the report we want to execute. To make the request we also need to add the Salesforce access token to the Authorization header, and then we can make the request to receive the report data.
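For comparison, the same request can be described without RestSharp by using the built-in System.Net.Http types. This is a sketch of an alternative, not the approach used in the sample project: the helper name and its parameters are hypothetical, and it only builds the request so the URL and header can be inspected before anything is sent.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;

public static class ReportRequestBuilder
{
    // Builds (but does not send) a GET request for a single report.
    // instanceUrl and accessToken are the values returned by the auth client.
    public static HttpRequestMessage Build(string instanceUrl, string apiVersion, string reportId, string accessToken)
    {
        var path = "/services/data/" + apiVersion + "/analytics/reports/" + Uri.EscapeDataString(reportId);
        var request = new HttpRequestMessage(HttpMethod.Get, new Uri(new Uri(instanceUrl), path));

        // Same header as above: "Authorization: Bearer <token>".
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", accessToken);
        return request;
    }
}
```

The resulting message could be passed to HttpClient.SendAsync, and the response body read as a string just like restResponse.Content above.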

Conclusion

As described, this is a pretty simple example of how to authenticate and request a report from the Salesforce Reports and Dashboards REST API using C#. Hopefully this can be a jumping-off point for accessing this data. The one major limitation for me is that the API only returns 2,000 records, which is especially frustrating if your Salesforce org has a lot of data. In the near future I will be writing a companion post on how to get around this limitation.

Upgrading to Microsoft.Azure.Management.DataLake.Store 0.10.1-preview to Access Azure Data Lake Store Using C#

Introduction

Microsoft recently released a new nuget package to programmatically access the Azure Data Lake Store. In a previous post Accessing Azure Data Lake Store from an Azure Data Factory Custom .Net Activity I am utilizing Microsoft.Azure.Management.DataLake.StoreFileSystem 0.9.6-preview to programmatically access the data lake using C#. In this post I will go through what needs to be changed with my previous code to upgrade to the new nuget package. I will also include a new version of the DataLakeHelper class which uses the updated sdk.

Upgrade Path

Since I already have a sample project utilizing the older sdk (Microsoft.Azure.Management.DataLake.StoreFileSystem 0.9.6-preview), I will use that as an example on what needs to be modified to use the updated nuget package (Microsoft.Azure.Management.DataLake.Store 0.10.1-preview).

The first step is to remove all packages which supported the obsolete sdk. Here is the list of all packages that can be removed:

- Hyak.Common

- Microsoft.Azure.Common

- Microsoft.Azure.Common.Dependencies

- Microsoft.Azure.Management.DataLake.StoreFileSystem

- Microsoft.Bcl

- Microsoft.Bcl.Async

- Microsoft.Bcl.Build

- Microsoft.Net.Http

All of these dependencies are needed when using the DataLake.StoreFileSystem package. In my previous sample I am also using Microsoft.Azure.Management.DataFactories in order to create a custom activity for Azure Data Factory; unfortunately, this package has a dependency on all of the above packages as well. Please be careful removing these packages, as your own applications might have other dependencies on those listed above. In order to show that these packages are no longer needed, my new sample project is just a simple console application using the modified DataLakeHelper class, which can be found here on GitHub.

Now let’s go through the few changes that need to be made to the DataLakeHelper class in order to use the new nuget package. The following functions from the original DataLakeHelper class will need to be modified:

- create_adls_client()

- execute_create(string path, MemoryStream ms)

- execute_append(string path, MemoryStream ms)

Here is the original code for create_adls_client():

    private void create_adls_client()
    {
        var authenticationContext = new AuthenticationContext($"https://login.windows.net/{tenant_id}");
        var credential = new ClientCredential(clientId: client_id, clientSecret: client_key);
        var result = authenticationContext.AcquireToken(resource: "https://management.core.windows.net/", clientCredential: credential);

        if (result == null)
        {
            throw new InvalidOperationException("Failed to obtain the JWT token");
        }

        string token = result.AccessToken;
        var _credentials = new TokenCloudCredentials(subscription_id, token);
        inner_client = new DataLakeStoreFileSystemManagementClient(_credentials);
    }

In order to upgrade to the new sdk, there are 2 changes that need to be made.

- The DataLakeStoreFileSystemManagementClient requires a ServiceClientCredentials object

- You must set the azure subscription id on the newly created client

The last two lines of the method are replaced with the following:

    var _credentials = new TokenCredentials(token);
    inner_client = new DataLakeStoreFileSystemManagementClient(_credentials);
    inner_client.SubscriptionId = subscription_id;

Now that we can successfully authenticate again with the Azure Data Lake Store, the next change is to the create and append methods.

Here is the original code for execute_create(string path, MemoryStream ms) and execute_append(string path, MemoryStream ms):

    private AzureOperationResponse execute_create(string path, MemoryStream ms)
    {
        var beginCreateResponse = inner_client.FileSystem.BeginCreate(path, adls_account_name, new FileCreateParameters());
        var createResponse = inner_client.FileSystem.Create(beginCreateResponse.Location, ms);
        Console.WriteLine("File Created");
        return createResponse;
    }

    private AzureOperationResponse execute_append(string path, MemoryStream ms)
    {
        var beginAppendResponse = inner_client.FileSystem.BeginAppend(path, adls_account_name, null);
        var appendResponse = inner_client.FileSystem.Append(beginAppendResponse.Location, ms);
        Console.WriteLine("Data Appended");
        return appendResponse;
    }

The change for both of these methods is pretty simple: the BeginCreate and BeginAppend methods are no longer available, and the new Create and Append methods now take the path and the Azure Data Lake Store account name directly.

With the changes applied the new methods are as follows:

    private void execute_create(string path, MemoryStream ms)
    {
        inner_client.FileSystem.Create(path, adls_account_name, ms, false);
        Console.WriteLine("File Created");
    }

    private void execute_append(string path, MemoryStream ms)
    {
        inner_client.FileSystem.Append(path, ms, adls_account_name);
        Console.WriteLine("Data Appended");
    }

Conclusion

As you can see, it was not difficult to upgrade to the new version of the SDK. Unfortunately, since these are all preview bits, changes like this can happen; hopefully this SDK has found its new home and won't go through too many more breaking changes for the end user.

Get All Users from JIRA REST API with C#

Introduction

I have been doing a lot of work integrating with various systems, which leads to the need to utilize many different APIs. One common data point I inevitably need to pull from the target system is a list of all users. I have recently been working with the JIRA REST API, and unfortunately there is no single method to get a list of all users. In this post I will provide a simple example in C# utilizing the /rest/api/2/user/search method to gather the list of users.

Prerequisites

- You will need access to a JIRA instance with the REST API enabled

- Visual Studio. Visual Studio Community 2015

- Create a new console application

- Install Atlassian.SDK nuget package

Visual Studio

First create a simple user object to model the json data being returned from the api.

    public class User
    {
        public bool Active { get; set; }
        public string DisplayName { get; set; }
        public string EmailAddress { get; set; }
        public string Key { get; set; }
        public string Locale { get; set; }
        public string Name { get; set; }
        public string Self { get; set; }
        public string TimeZone { get; set; }
    }

Next we create a simple wrapper around the jira client provided by the Atlassian SDK.

The Jira client has built-in functions, mostly for getting issues or projects from the API. Luckily, it exposes the underlying REST client so you can execute any request you want against the Jira API. In the GetAllUsers method I am making a request to user/search?username={item} while iterating through the alphabet. This request will search the username, name or email address of the user object in Jira. Since a username will likely contain more than one letter, the same user will come back for several requests, so we have to check that a user in the result set is not already in our list. Clearly this is not going to be the most performant method; however, there is no other way to gather a full list of all users. Finally, we can create the Jira helper wrapper and invoke the GetAllUsers method.
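The loop and duplicate check described above can be sketched independently of the Jira client. In this sketch the search delegate is a stand-in for the request to user/search?username={letter}, and the JiraUser and UserCollector names are hypothetical; it assumes the user key is what uniquely identifies a user.

```csharp
using System;
using System.Collections.Generic;

public class JiraUser
{
    public string Key { get; set; }
    public string DisplayName { get; set; }
}

public static class UserCollector
{
    // Calls the supplied search function once per letter and keeps only the
    // first occurrence of each user key.
    public static List<JiraUser> CollectAll(Func<string, IEnumerable<JiraUser>> search)
    {
        var seenKeys = new HashSet<string>(StringComparer.OrdinalIgnoreCase);
        var users = new List<JiraUser>();

        for (var letter = 'a'; letter <= 'z'; letter++)
        {
            foreach (var user in search(letter.ToString()))
            {
                // HashSet.Add returns false when the key was already collected.
                if (seenKeys.Add(user.Key))
                {
                    users.Add(user);
                }
            }
        }

        return users;
    }
}
```

Tracking keys in a HashSet keeps the duplicate check cheap even when the same user is returned for many letters.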

    class Program
    {
        static void Main(string[] args)
        {
            var helper = new JiraApiHelper();
            var users = helper.GetAllUsers();
        }
    }

Conclusion

As I stated above this solution is not going to be performant, especially if the Jira instance has a large number of users. However, if the need is to get the entire universe of users for the Jira instance then this is one approach that accomplishes that goal.

Starting an Azure Data Factory Pipeline from C# .Net

Introduction

Azure Data Factory (ADF) does an amazing job orchestrating data movement and transformation activities between cloud sources with ease. Sometimes you may also need to reach into your on-premises systems to gather data, which is also possible with ADF through data management gateways. However, you may run into a situation where you already have local processes running, or you cannot run a specific process in the cloud, but you still want to have an ADF pipeline dependent on the data being processed locally. For example, you may have an ETL process that begins with a locally run process that stores data in Azure Data Lake. Once that process is completed, you want the ADF pipeline to begin processing that data, followed by any other activities or pipelines. The key is starting the ADF pipeline only after the local process has completed. This post will highlight how to accomplish this through the use of the Data Factory Management API.

Prerequisites

- You will need an Azure Subscription. Free Trial

- Visual Studio. Visual Studio Community 2015

- A Data Factory you want to manually start from .Net

Continue reading “Starting an Azure Data Factory Pipeline from C# .Net”

Accessing Azure Data Lake Store from an Azure Data Factory Custom .Net Activity

04/05/2016 Update: If you are looking to use the latest version of the Azure Data Lake Store SDK (Microsoft.Azure.Management.DataLake.Store 0.10.1-preview) please see my post Upgrading to Microsoft.Azure.Management.DataLake.Store 0.10.1-preview to Access Azure Data Lake Store Using C# for what needs to be done to update the DataLakeHelper class.

Introduction

When working with Azure Data Factory (ADF), having the ability to take advantage of Custom .Net Activities greatly expands the ADF use case. One particular example where a Custom .Net Activity is necessary would be when you need to pull data from an API on a regular basis. For example you may want to pull sales leads from the Salesforce API on a daily basis or possibly some search query against the Twitter API every hour. Instead of having a console application scheduled on some VM or local machine, this can be accomplished with ADF and a Custom .Net Activity.

With the data extraction portion complete the next question is where would the raw data land for continued processing? Azure Data Lake Store of course! Utilizing the Azure Data Lake Store (ADLS) SDK, we can land the raw data into ADLS allowing for continued processing down the pipeline. This post will focus on an end to end solution doing just that, using Azure Data Factory and a Custom .Net Activity to pull data from the Salesforce API then landing it into ADLS for further processing. The end to end solution will run inside a Custom .Net Activity but the steps here to connect to ADLS from .net are universal and can be used for any .net application.

Prerequisites

- You will need an Azure Subscription. Free Trial

- Visual Studio. Visual Studio Community 2015

- Azure Data Lake Store (ADLS)

Continue reading “Accessing Azure Data Lake Store from an Azure Data Factory Custom .Net Activity”