Problem

Azure Data Lake Store (ADLS) lets you store all of your raw data in one location, readily accessible for analysis. In my case, I am pulling data from Salesforce and using the ADLS .NET SDK to store the results in the data lake. Anyone who has worked with Salesforce knows that an object can have hundreds of custom fields, which leads to a file in ADLS with hundreds of columns. One of the first transformations I wanted to use U-SQL and Azure Data Lake Analytics (ADLA) for was to evaluate only a subset of the data by querying a few columns out of the hundreds that might exist in a file.

An example script might look like this:

    @tasks_raw =
        EXTRACT AccountId string,
                Id string,
                OwnerId string,
                Status string,
                Subject string,
                ....
                .... More Fields ....,
                ....
                WhatCount int?,
                WhatId string,
                WhoCount int?,
                WhoId string
        FROM "/RawData/Salesforce/Task/2016/01/25/Task.csv"
        USING Extractors.Csv();

    @tasks_subset =
        SELECT AccountId,
               ActivityDate,
               CreatedById,
               CreatedDate,
               Id,
               OwnerId,
               Status
        FROM @tasks_raw;

    OUTPUT @tasks_subset
    TO "/Subset/Salesforce/Task/2016/01/25/Task.csv"
    USING Outputters.Csv();

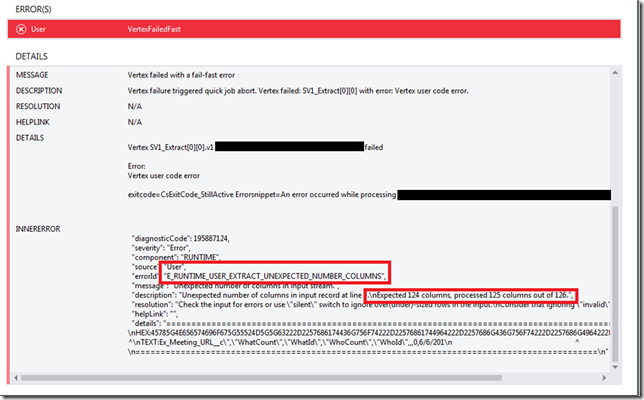

The first step is to impose a schema on top of the file by using the EXTRACT statement and storing the result in a rowset variable. From that variable I can then select only the subset of columns I need for later processing. The problem I commonly run into is with the EXTRACT portion of this script: I have frequently received the Unexpected Number of Columns error, as seen in the screenshot above.
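
With hundreds of columns, it helps to find out which records are misaligned before changing anything else. The following is a minimal sketch, not part of the original pipeline: `ColumnCountCheck`, `CountFields`, and `FindBadLines` are hypothetical names, and the code assumes comma-delimited text with double-quoted fields and no line breaks inside a field.

```csharp
using System.Collections.Generic;
using System.IO;

public static class ColumnCountCheck
{
    // Counts the fields in one CSV line, treating commas inside
    // double quotes as data rather than as delimiters.
    public static int CountFields(string line)
    {
        int fields = 1;
        bool inQuotes = false;
        foreach (char c in line)
        {
            // An escaped quote ("") toggles twice, so the state is unchanged.
            if (c == '"') inQuotes = !inQuotes;
            else if (c == ',' && !inQuotes) fields++;
        }
        return fields;
    }

    // Returns the 1-based numbers of the lines whose field count
    // differs from the expected column count.
    public static List<int> FindBadLines(TextReader reader, int expectedColumns)
    {
        var bad = new List<int>();
        string line;
        int lineNumber = 0;
        while ((line = reader.ReadLine()) != null)
        {
            lineNumber++;
            if (CountFields(line) != expectedColumns) bad.Add(lineNumber);
        }
        return bad;
    }
}
```

Running `FindBadLines` over a local copy of the file with the number of columns declared in the EXTRACT statement gives a list of line numbers to inspect by hand.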

Solution

The most common cause of this error, especially when the input file is a CSV, is a comma inside one of the data cells. However, even after I had sanitized the data as thoroughly as I could before writing it to ADLS with the .NET SDK, I still received this error.
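
As an illustration of the kind of sanitization meant here, the sketch below quotes fields in the RFC 4180 style before a row is written. It is an assumption about one reasonable approach, not the exact code I used: `CsvRow`, `Escape`, and `Format` are hypothetical names, and the approach only helps if the reader honors double-quoted fields. Line breaks inside a field are quoted too, but whether the extractor accepts them is worth verifying separately; replacing them before writing may be the safer route.

```csharp
using System.Collections.Generic;
using System.Linq;

public static class CsvRow
{
    // Wraps a field in double quotes when it contains a comma, a quote,
    // or a line break, and doubles any embedded quotes.
    public static string Escape(string field)
    {
        if (field == null) return "";
        bool needsQuotes = field.IndexOfAny(new[] { ',', '"', '\r', '\n' }) >= 0;
        if (!needsQuotes) return field;
        return "\"" + field.Replace("\"", "\"\"") + "\"";
    }

    // Joins the escaped fields and terminates the record with CRLF,
    // so every row ends on a record boundary.
    public static string Format(IEnumerable<string> fields)
    {
        return string.Join(",", fields.Select(Escape)) + "\r\n";
    }
}
```

For example, `CsvRow.Format(new[] { "a", "b,c" })` keeps the comma in the second value inside quotes, so the row still reads as two columns.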

I began a dialog with the Azure Data Lake team, who informed me that using the ADLS .NET SDK to create a file and append data to it could be causing a column alignment issue. If you use the .NET SDK this way, be aware that there is a 4 MB limit on each request. If you send a request with N rows and that batch is larger than 4 MB, the request is cut off in the middle of a record rather than at a record boundary. The solution is simple: I needed to add logic that batches the rows into chunks of 4 MB or less, ensuring that the final row of every chunk ends on a record boundary.

Some sample code would look like this:

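    using System.Collections.Generic;
    using System.IO;
    using System.Text;

    // Request size limit; assumed here to be 4 * 1024 * 1024 bytes.
    private const int FOURMB = 4 * 1024 * 1024;

    // Writes the rows to the file at path in requests of at most FOURMB.
    // Each row is expected to already end with its record delimiter.
    public void StoreData(string path, List<string> rows, bool append)
    {
        var buffer = new MemoryStream();
        var sw = new StreamWriter(buffer);

        foreach (var row in rows)
        {
            // Send the buffer before this row would push it past the limit,
            // so every request ends on a record boundary.
            if (buffer.Length > 0 && buffer.Length + Encoding.UTF8.GetByteCount(row) > FOURMB)
            {
                buffer.Position = 0;
                if (append)
                {
                    execute_append(path, buffer);
                }
                else
                {
                    execute_create(path, buffer);
                    append = true; // the file now exists, so append from here on
                }

                buffer = new MemoryStream();
                sw = new StreamWriter(buffer);
            }

            sw.Write(row);
            sw.Flush(); // keep buffer.Length accurate for the next size check
        }

        // Send whatever is left over after the last full batch.
        if (buffer.Length <= 0) return;

        buffer.Position = 0;
        if (append)
        {
            execute_append(path, buffer);
        }
        else
        {
            execute_create(path, buffer);
        }
    }

    private AzureOperationResponse execute_create(string path, MemoryStream ms)
    {
        var beginCreateResponse = inner_client.FileSystem.BeginCreate(path, DataLakeAppConfig.DataLakeAccountName, new FileCreateParameters());
        var createResponse = inner_client.FileSystem.Create(beginCreateResponse.Location, ms);
        return createResponse;
    }

    private AzureOperationResponse execute_append(string path, MemoryStream ms)
    {
        var beginAppendResponse = inner_client.FileSystem.BeginAppend(path, DataLakeAppConfig.DataLakeAccountName, null);
        var appendResponse = inner_client.FileSystem.Append(beginAppendResponse.Location, ms);
        return appendResponse;
    }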
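
The batching rule can also be separated from the SDK calls, which makes it easy to test on its own. The sketch below is one possible variant under stated assumptions rather than the code above: `RowBatcher` and `Batch` are hypothetical names, each row is assumed to already end with its record delimiter, and a single row larger than the limit is rejected because it cannot be sent without splitting a record.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text;

public static class RowBatcher
{
    // Groups complete rows into byte arrays of at most maxBytes each,
    // so that every batch ends on a record boundary.
    public static IEnumerable<byte[]> Batch(IEnumerable<string> rows, int maxBytes)
    {
        var buffer = new MemoryStream();
        foreach (var row in rows)
        {
            byte[] bytes = Encoding.UTF8.GetBytes(row);
            if (bytes.Length > maxBytes)
                throw new ArgumentException("A single row exceeds the batch size limit.");

            // Emit the current batch before this row would overflow it.
            if (buffer.Length + bytes.Length > maxBytes)
            {
                yield return buffer.ToArray();
                buffer = new MemoryStream();
            }
            buffer.Write(bytes, 0, bytes.Length);
        }

        // Emit the final, partially filled batch if there is one.
        if (buffer.Length > 0) yield return buffer.ToArray();
    }
}
```

Each array returned by `Batch` can then be wrapped in a `MemoryStream` and passed to the create call for the first batch and to the append call for the rest.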

Conclusion

If you work with any type of delimited file, you have no doubt run into this unexpected number of columns error. The problem is exacerbated when the file has hundreds of columns, which makes the cause very difficult to track down. If you are using the ADLS SDK to store files in the data lake and you have thoroughly gone through all other possible solutions, give this a shot. Either way, it might be worth changing your storage pattern to avoid this problem with future data files.